Generative AI became one of the hottest investment areas last year, with more than $18.3bn in venture capital going to startups in the sector in 2023. But even as investors jostle to back generative AI startups, regulatory uncertainty is building around the sector. There are at least nine class-action lawsuits against AI companies over alleged copyright infringement. The New York Times, for example, is suing OpenAI, the creator of ChatGPT, and Microsoft for using the newspaper’s journalism to train their large language models.

Some of that is spooking investors.

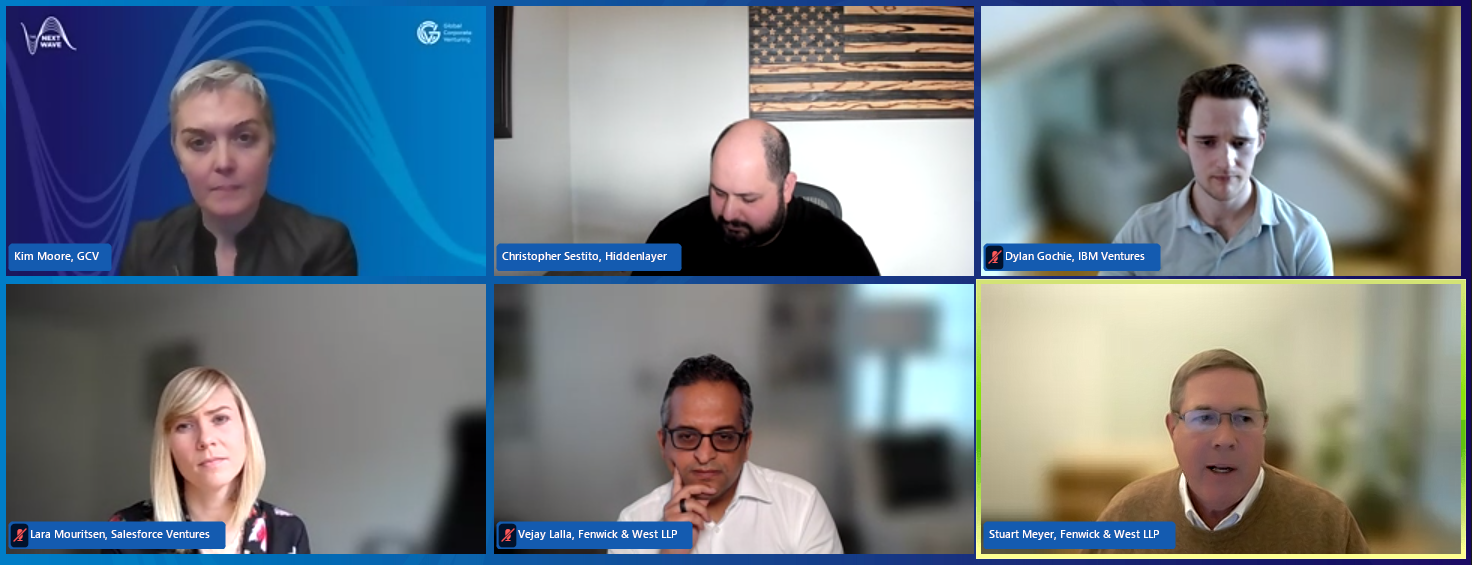

“We’ve had investors recently call us and say: ‘Should we not be investing in foundational models at this point? Or platforms that are getting a lot of data that may be copyrighted?’,” says Vejay Lalla, partner at law firm Fenwick & West.

At the same time, governments are developing legislation on artificial intelligence. US President Joe Biden issued an executive order on the secure development of AI last October, and the text of the European Union’s AI Act is being finalised. The act would ban practices such as certain uses of remote biometric identification and the use of AI to manipulate behaviour.

Stuart Meyer, partner at Fenwick & West, says that after a frenetic 2023 spent launching generative AI products, companies are using 2024 to pause and evaluate how well these systems will comply with evolving regulatory standards.

“Last year was all about getting something out there into the world. And there’s a moment of repose, where people are saying, how do we do this in a manner that’s not going to be looked at poorly?” he says.

And while every new technology has to deal with regulation, generative AI has come under political and legal scrutiny unusually quickly because it has generated so much hype among the general public.

“This technology has gone from outside mainstream to a very mainstream focus with a tonne of individual attention,” says Lara Mouritsen, head of legal at Salesforce Ventures. “We’ve seen regulators move incredibly quickly and the discussion has gone from one to 100 really quickly for this round of technological development.”

Mouritsen, Lalla and Meyer were part of a Global Corporate Venturing webinar discussing how investors and generative AI startups should navigate this period of regulatory flux. They were joined by Dylan Gochie-Amaro, senior associate at IBM Ventures, and Chris Sestito, CEO and founder of HiddenLayer, a startup providing machine learning-based IT security solutions.

Here are nine points to take away from the discussion.

1. Look for nimble startup teams that can deal with uncertainty and change

Investors always say the quality of the founding team is one of the key things they look at when deciding whether to back a startup. But this is especially true in an environment with so many unknowns, where teams will almost certainly have to pivot and adapt as the rules shift. A track record of operating in an area with tricky regulation is a big bonus right now.

“In an environment where regulations are so dynamic and changing we are tripling down on investing in the right founders,” says Gochie-Amaro. “We try to understand how the team has manoeuvred their organisations in the past through different regulatory risks.”

Investors aren’t expecting young companies to have all the answers, but they want to see evidence of a nimble team. “Something we’re looking for now is ability to move really quickly,” says Mouritsen.

2. Gen AI startups don’t have to be fully compliant from day one. But they need to have a roadmap to compliance.

Young companies have a lot of calls on their cash, and even lawyers recognise that it is simply not realistic to spend all of it on becoming regulation-proof.

“We’re not telling clients to spend all their initial cash on compliance. We’re giving them kind of a roadmap to think about how they get compliant over the long term,” says Lalla.

Meyer says that startups need to show that they have thought about regulation and have a plan for how they would meet it in the future, given more time and funding.

And while regulations and frameworks are emerging from a variety of bodies, the good news is that you don’t have to try to follow all of them at once, at least in the beginning. Just start with one.

“Whichever one you choose, it will put you in the right direction. You may have to do some adjustments as things evolve. But showing that you’re complying with any of these things is important,” says Meyer.

3. If you do pick just one regulator to follow, go with the strictest

In terms of strictness, following EU guidance is usually a good bet.

“The EU AI Act is probably the primary one that we’re seeing investors talk about and think about, not only for the operations of their business, but from an investment or acquisition standpoint as well,” says Lalla. “It’s the most developed framework and will have real revenue consequences, depending on the level of enforcement, similar to GDPR [General Data Protection Regulation]. It’s going to be a model for other international regimes.”

On privacy, he adds, California’s regulations are among the most exacting, so they would be the ones to follow in that area.

4. Despite the hype, AI regulation isn’t going to be wildly different from other tech regulation

Although the risks of generative AI have been making headlines recently, the actual regulation of the technology is likely to follow the same pattern we have seen with other innovations.

“Judges are very wary of completely stopping technology development, so you’re going to have some sort of balanced approach,” says Lalla.

HiddenLayer’s Sestito says that his team is looking at parallels in other areas.

“We’re reflecting on other recent technological shifts and regulation that ended up being imposed upon things like endpoint networks and data moving to the cloud. Ultimately, regulation ends up at a reasonable place, even though it’s even a little earlier on now. We expect regulation to really look very similar to other technological assets,” he says.

The General Data Protection Regulation, in particular, is a good example to look at, he says. Much of the regulation that follows any technology shift is about keeping companies accountable.

“As a security vendor, we looked at things like data integrity, what that’s going to mean for things like chain of custody for model provenance,” he says.

5. There are steps you can take on generative AI copyright issues now

While the court cases over alleged copyright violations by generative AI companies have yet to conclude, startups and their investors are already taking steps to protect themselves. Many generative AI companies offer limited indemnification to protect users of their tools from IP claims. But Lalla advises reading the small print carefully, as these offers typically contain exclusions that could catch you out.

In general, he says, it is worth evaluating generative AI tools according to how much transformation happens between input and output.

“If you are putting something into your model and something very similar is coming out, you have a problem on your hands, but the more transformative the content outputs are, the more you will have defences,” he says.

6. Regulation isn’t the only problem for generative AI. You also have to think about customer perception.

If nobody is buying your product, whether or not you comply with regulation becomes a secondary problem.

“From the company’s perspective it’s really important to think about what customers are concerned about now,” says Meyer. There is already some consumer distrust of generative AI technologies, and companies will have to work to dispel that.

Annual research by Cisco indicated that some 60% of consumers have lost trust in organisations over their AI practices, while some 91% of organisations said they needed to do more to build customer confidence over their use of AI.

It is particularly a problem for smaller startups, says Mouritsen. “One of the elements that is really difficult as a young company is establishing your trustworthiness in the market,” she says.

7. Also consider political risk.

Amid heightened geopolitical tensions, it is also worth being aware that generative AI tools are likely to be caught in the crossfire.

“There are nation states that are viewing generative AI as a powerful tool to advance various agendas and we have to be careful to make sure that we’re not investing in companies that are empowering that,” he says.

HiddenLayer, for example, is trying to stay ahead of any complications by vetting its customer base.

“We’ve committed to not do any business in any country that falls below the median in the world freedom index,” says Sestito. “There are things that you can do to help show others that the ethical component is something that you’re going to uphold.”

8. If you don’t think about regulation from the start it may get in the way of an exit.

The bottom line for investors is that, for a startup to list on a public market or be acquired by a public company, it will need to have its regulatory compliance in order. So it is better to think about this from the start.

“If you want to be acquired by a sophisticated large public company, you’re going to have to have taken all the steps that you can [to] mitigate regulatory risk or legal risk,” says Mouritsen. “If you’ve never looked at these things, that acquisition opportunity might not come. Or if it does, it’s going to require so much rebuilding that it will slow.”

9. Corporate investors may have some advantages in helping steer startups through regulation.

Here’s the good news: corporate investors are well placed to help the startups they invest in navigate regulatory hurdles, even when there is a lack of clarity.

“We have the benefit of having some great experts around us. Bringing in support from your corporate can really be your superpower. Our IP team is already dealing with the shifts in IP law and are able to relay information about them when a decision comes out,” says Mouritsen.


This webinar is part of GCV’s The Next Wave series of webinars. We run a webinar on the second Wednesday of every month, alternating between advice for CVC practitioners and deep dives into specific investment areas. Our next webinar will be Venture Building – How to avoid the common failures on February 14 2024.